NLNL: Negative Learning for Noisy Labels

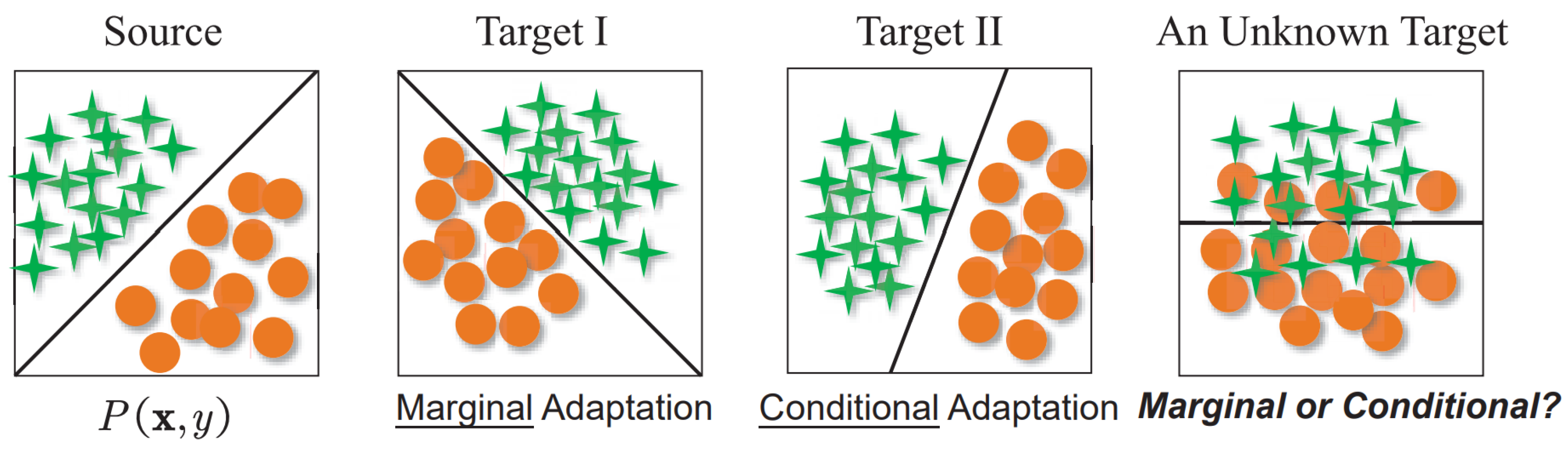

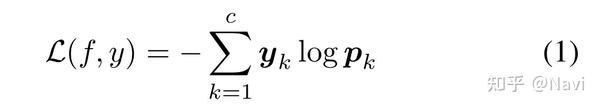

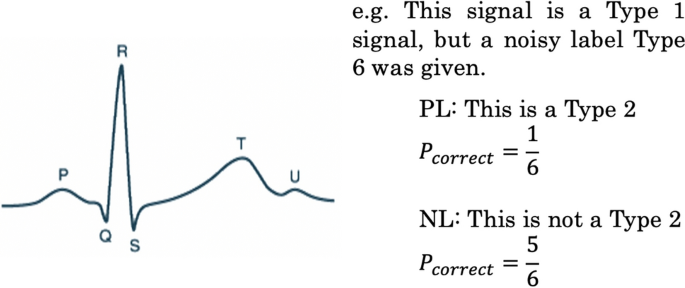

Deep Learning Classification With Noisy Labels (DeepAI): It is widely accepted that label noise has a negative impact on the accuracy of a trained classifier. Several works have started to pave the way towards noise-robust training. ... [11] Y. Kim, J. Yim, J. Yun, and J. Kim (2019). NLNL: Negative Learning for Noisy Labels. arXiv abs/1908.07387 (cited in Table 1, §4.2, §4.4, and §5).

Joint Negative and Positive Learning for Noisy Labels (DeepAI), Section 3, Negative Learning for Noisy Labels (NLNL): Throughout this paper, we consider the problem of $c$-class classification. Let $x \in \mathcal{X}$ be an input, let $y, \bar{y} \in \mathcal{Y} = \{1, \dots, c\}$ be its label and complementary label, respectively, and let $\mathbf{y}, \bar{\mathbf{y}} \in \{0,1\}^c$ be their one-hot vectors. Suppose the CNN, ...
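
To make this notation concrete, here is a minimal Python sketch. It assumes labels are 1-indexed as in $\mathcal{Y} = \{1, \dots, c\}$ and that the complementary label $\bar{y}$ is drawn uniformly at random from every class except the given label $y$. The helper names `one_hot` and `sample_complementary` are hypothetical and do not come from any released NLNL code.

```python
import random

def one_hot(label: int, c: int) -> list[int]:
    """One-hot vector in {0,1}^c for a label in {1, ..., c} (1-indexed, as in the text)."""
    if not 1 <= label <= c:
        raise ValueError("label must lie in {1, ..., c}")
    return [1 if k == label else 0 for k in range(1, c + 1)]

def sample_complementary(label: int, c: int, rng: random.Random) -> int:
    """Assumed rule: draw y_bar uniformly from {1, ..., c} with the given label removed."""
    candidates = [k for k in range(1, c + 1) if k != label]
    return rng.choice(candidates)

rng = random.Random(0)  # fixed seed so the sketch is reproducible
c, y = 10, 3
y_bar = sample_complementary(y, c, rng)
print(y_bar != y)       # True: a complementary label never equals the given label
print(one_hot(y, c))    # [0, 0, 1, 0, 0, 0, 0, 0, 0, 0]
```

Because $\bar{y}$ only asserts "the input does not belong to this class", it carries less information per sample than $y$, which is the trade-off the NLNL snippets below discuss.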

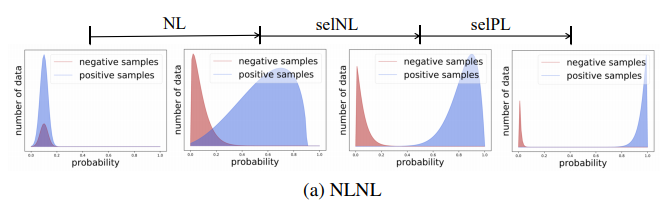

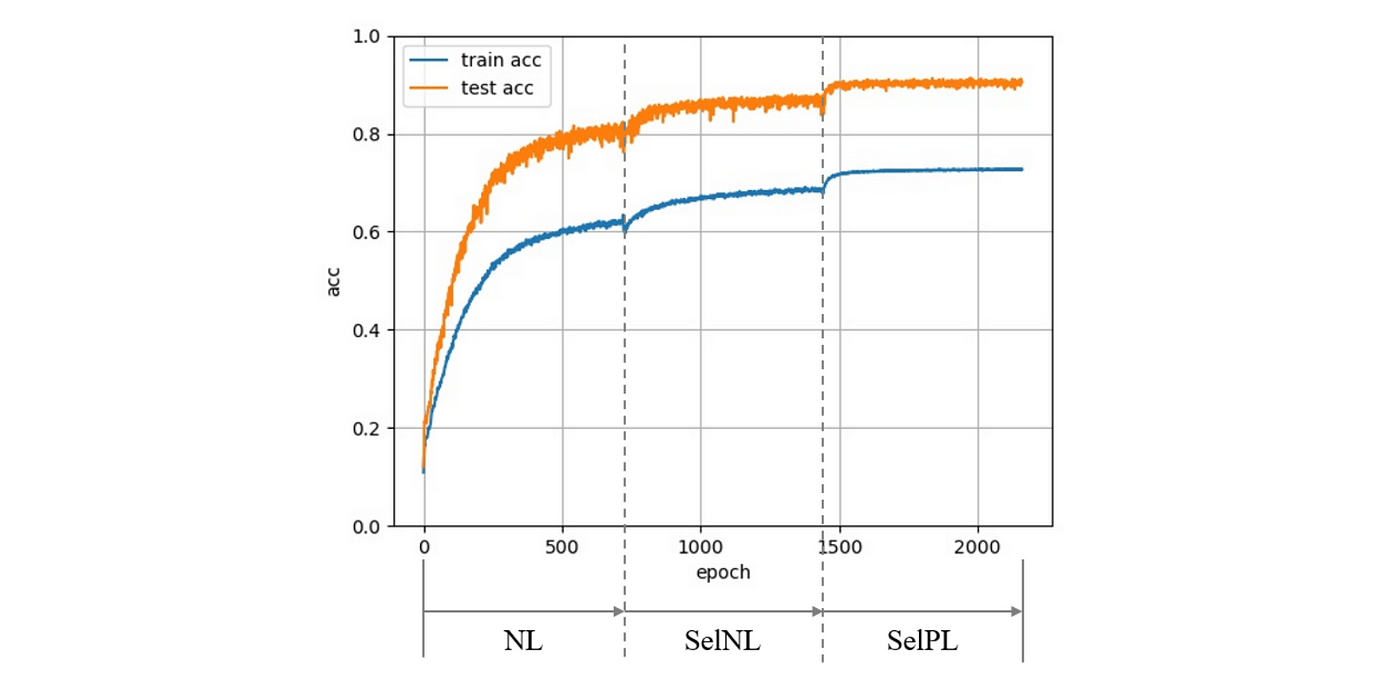

NLNL: Negative Learning for Noisy Labels (IEEE Xplore): Because the chances of selecting a true label as a complementary label are low, Negative Learning (NL) decreases the risk of providing incorrect information. Furthermore, to improve convergence, we extend our method by adopting Positive Learning (PL) selectively, termed Selective Negative Learning and Positive Learning (SelNLPL).
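
The "low chance" claim can be checked with a few lines of arithmetic. The sketch below assumes the complementary label is drawn uniformly from the $c-1$ classes other than the given label, and that a fraction $r$ of the given labels are wrong. If the given label is correct, the true class is excluded from the draw; if it is wrong, the true class is one of the $c-1$ candidates. `p_complementary_is_true` is a hypothetical helper written for this illustration.

```python
from fractions import Fraction

def p_complementary_is_true(c: int, noise_rate: Fraction) -> Fraction:
    """Probability that a uniformly drawn complementary label is the TRUE class.

    Clean sample (prob. 1 - r): the true class is the given label, which is excluded -> 0.
    Noisy sample (prob. r): the true class is one of the c - 1 candidates -> 1 / (c - 1).
    """
    if c < 2:
        raise ValueError("need at least two classes")
    return noise_rate * Fraction(1, c - 1)

c = 10                # e.g. a 10-class problem
r = Fraction(1, 2)    # assume half of the given labels are wrong
print(p_complementary_is_true(c, r))  # 1/18
print(r)                              # 1/2: how often PL trains on a wrong target
```

Under these assumptions, PL is fed a wrong target half the time, while NL gives incorrect information ("not the true class") only 1/18 of the time, about 5.6%.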

NLNL: Negative Learning for Noisy Labels (ResearchGate): With a simple semi-supervised training technique, our method achieves state-of-the-art accuracy for noisy data classification, proving the superiority of SelNLPL's noisy data filtering ability. Using...

NLNL: Negative Learning for Noisy Labels (PDF): Furthermore, utilizing the NL training method, we propose Selective Negative Learning and Positive Learning (SelNLPL), which combines PL and NL to take full advantage of both methods for better training with noisy data. Although PL is unsuitable for noisy data, it is still a fast and accurate method for clean data.
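
The combination of PL and NL can be sketched as per-sample losses with a confidence gate. This is an illustration under stated assumptions, not the authors' code: it assumes PL is cross-entropy $-\log p_y$, NL is $-\log(1 - p_{\bar{y}})$, and SelNLPL runs in stages (plain NL, then NL only on samples whose confidence on the given label exceeds $1/c$, then PL only on samples whose confidence exceeds a threshold $\gamma$, assumed here to be 0.5). The function names and the `stage` strings are hypothetical, and classes are 0-indexed because the code indexes Python lists.

```python
import math
from typing import Optional

def pl_loss(probs: list[float], y: int) -> float:
    """Positive Learning: cross-entropy on the given label, -log p_y."""
    return -math.log(probs[y])

def nl_loss(probs: list[float], y_bar: int) -> float:
    """Negative Learning: -log(1 - p_{y_bar}), pushing the complementary class toward 0."""
    return -math.log(1.0 - probs[y_bar])

def stage_loss(probs: list[float], y: int, y_bar: int, stage: str,
               gamma: float = 0.5) -> Optional[float]:
    """Per-sample loss for one assumed SelNLPL stage, or None if the sample is skipped.

    probs: softmax output of the CNN for one sample (0-indexed classes).
    """
    c = len(probs)
    confidence = probs[y]  # confidence on the GIVEN (possibly noisy) label
    if stage == "NL":
        return nl_loss(probs, y_bar)  # every sample gets NL
    if stage == "SelNL":
        # Assumed gate: keep NL only where confidence beats the uniform level 1/c.
        return nl_loss(probs, y_bar) if confidence > 1.0 / c else None
    if stage == "SelPL":
        # Assumed gate: trust the given label for PL only where confidence exceeds gamma.
        return pl_loss(probs, y) if confidence > gamma else None
    raise ValueError(f"unknown stage: {stage!r}")

probs = [0.7, 0.2, 0.1]  # c = 3
print(stage_loss(probs, y=0, y_bar=1, stage="SelNL"))  # -log(0.8), about 0.223
print(stage_loss(probs, y=0, y_bar=1, stage="SelPL"))  # -log(0.7), about 0.357
print(stage_loss([0.2, 0.5, 0.3], y=0, y_bar=1, stage="SelNL"))  # None: 0.2 < 1/3
```

The gates reflect the reasoning in the snippet: PL is fast and accurate on clean data, so it is applied only where the network already agrees confidently with the given label, which is where a label is most likely to be clean.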

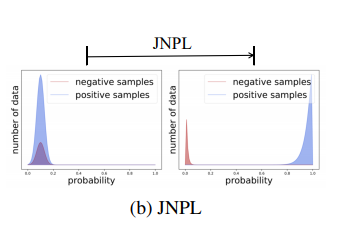

[Label Noise] Learning with Label Noise (云开发程序员):

- 2013, NIPS: Learning with Noisy Labels.
- 2014, ML: Learning from multiple annotators with varying expertise.
- 2014: A Comprehensive Introduction to Label Noise.
- 2014: Learning from Noisy Labels with Deep Neural Networks.
- 2015, ICLR Workshop: Training Convolutional Networks with Noisy Labels.

Joint Negative and Positive Learning for Noisy Labels: As a result, filtering noisy data through the NLNL pipeline is cumbersome, increasing the training cost. In this study, we propose a novel improvement of NLNL, named Joint Negative and Positive Learning (JNPL), that unifies the filtering pipeline into a single stage.

NLNL: Negative Learning for Noisy Labels (IEEE Computer Society): Convolutional Neural Networks (CNNs) provide excellent performance when used for image classification. The classical method of training CNNs is by labeling images in a supervised manner as in ...

ICCV 2019 Open Access Repository: NLNL: Negative Learning for Noisy Labels. Youngdong Kim, Junho Yim, Juseung Yun, Junmo Kim. Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 2019, pp. 101-110.

Joint Negative and Positive Learning for Noisy Labels (Semantic Scholar): A novel improvement of NLNL is proposed, named Joint Negative and Positive Learning (JNPL), that unifies the filtering pipeline into a single stage, allowing greater ease of practical use compared to NLNL. Training Convolutional Neural Networks (CNNs) on data with noisy labels is known to be challenging. Based on the fact that directly providing the label to the data (Positive Learning ...

![NLNL: Negative Learning for Noisy Labels, Figure 5 (Semantic Scholar)](https://d3i71xaburhd42.cloudfront.net/ab7539b938ca8a99b5fb34695e1b86ef0c6f3632/5-Figure5-1.png)

![Joint Negative and Positive Learning for Noisy Labels (CVPR 2021), video thumbnail](https://i.ytimg.com/vi/1oSExxg9txY/hqdefault.jpg)